import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib.ticker as ticker

import seaborn as sns

import folium

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

from sklearn.cluster import KMeans, Birch

from sklearn.mixture import GaussianMixture

from sklearn.metrics import silhouette_score, make_scorer

from sklearn.model_selection import RandomizedSearchCV

from collections import Counter

pd.set_option('display.max_columns', None)

import warnings

warnings.filterwarnings("ignore")Donor Segmentation Using an Ensemble of Clustering Algorithms - Hyperparameter Tuning

An artificial dataset of charity donors spanning 3 years is preprocessed for clustering. Preprocessing steps includes feature selection by correlation analysis and dimensionality reduction using PCA. A random search is used to tune the hyperparameters of KMeans, GMM and BIRCH models for k=4 and k=5 clusters. The models are combined into an ensemble with hard voting to cluster donors into groups with similar donation patterns. The results of each clustering experiment are geographically presented through interactive maps and judged through visual inspection and comparing silhouette scores.

This is a notebook directly from one of my projects, and so this post tells less of a story than the others. I think this notebook is better viewed as an exposition of some voluntary data analysis work, and guidance to anyone hoping to use python for donor segmentation in a similar way.

The goal of this project is to segment recorded donors into classes such as ‘Loyal Supporter’ or ‘One-Off Donor’ by viewing the problem as one of unsupervised ML and applying clustering algorithms to a dataset of donation history.

Importantly, geographic features such as latitude and longitude are not included in the dataset on which clustering is applied. Thus we shouldn’t expect to see (and indeed don’t see) any geographic pattern in the clusters.

The artificial dataset used in this post is far more noisy than the real dataset used for Emmanuel House. Thus cleanly seperated and interpretable clusters are harder to achieve here.

The purpose of this post is not to achieve good clustering results on the artificial dataset. The purpose is to illustrate, using the artificial dataset, my method for tuning the model that performed well on the real dataset.

def drop_column(df, col_name):

if col_name in df.columns:

df = df.drop(columns=[col_name])

return dfdf = pd.read_csv('../eh3/data.csv')df['Transactions_DateOfFirstGift'] = pd.to_datetime(df['Transactions_DateOfFirstGift'])

df['Transactions_DateOfLastGift'] = pd.to_datetime(df['Transactions_DateOfLastGift'])FEATURES

unscaled_features = ['Transactions_LifetimeGiftsAmount',

'Transactions_LifetimeGiftsNumber',

'Transactions_AverageGiftAmount',

'Transactions_Months1To12GiftsAmount',

'Transactions_Months1To12GiftsNumber',

'Transactions_Months13To24GiftsAmount',

'Transactions_Months13To24GiftsNumber',

'Transactions_Months25To36GiftsAmount',

'Transactions_Months25To36GiftsNumber',

'Transactions_FirstGiftAmount',

'Transactions_LastGiftAmount',

'monthsSinceFirstDonation',

'monthsSinceLastDonation',

'activeMonths',

'Transactions_HighestGiftAmount',

'Transactions_LowestGiftAmount',

]

scaled_features = ['DonationFrequency',

'DonationFrequencyActive',]

binary_features = []

features = unscaled_features + scaled_features + binary_featureslen(features)18PREPROCESSING

CORRELATION ANALYSIS

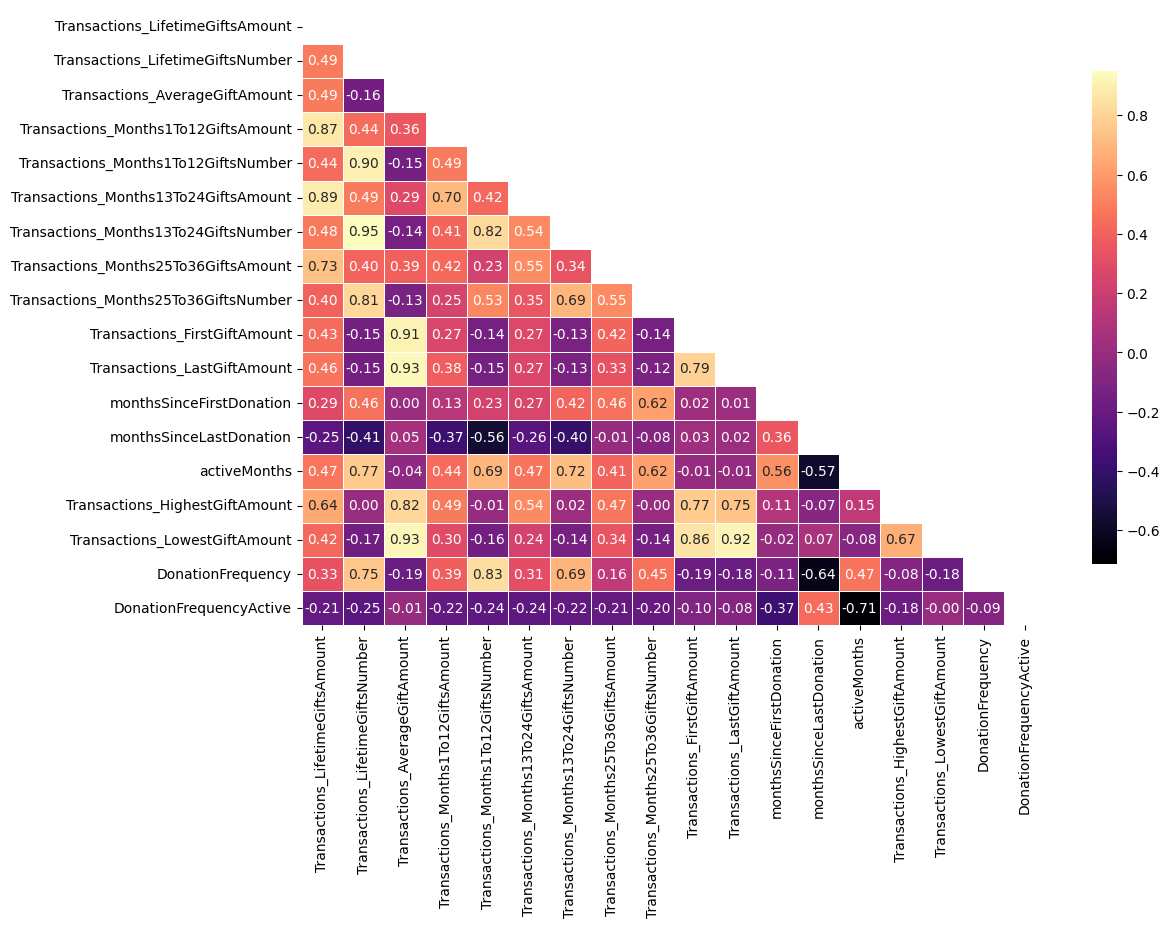

plt.figure(figsize=(12, 8))

# Compute the correlation matrix

corr = df[features].corr(numeric_only=True)

# Generate a mask for the upper triangle

mask = np.triu(np.ones_like(corr, dtype=bool), k=0)

# Draw the heatmap with the mask

sns.heatmap(corr, mask=mask, annot=True, fmt=".2f", cmap='magma',

cbar_kws={"shrink": .8}, linewidths=.5)

# Rotate the y labels (ticks) to horizontal

plt.yticks(rotation=0)

plt.show()

print('15 most highly correlated pairs of features (in absolute value):\n')

print(corr.abs().where(~mask).stack().sort_values(ascending=False).head(15))15 most highly correlated pairs of features (in absolute value):

Transactions_Months13To24GiftsNumber Transactions_LifetimeGiftsNumber 0.949797

Transactions_LastGiftAmount Transactions_AverageGiftAmount 0.934479

Transactions_LowestGiftAmount Transactions_AverageGiftAmount 0.928709

Transactions_LastGiftAmount 0.915917

Transactions_FirstGiftAmount Transactions_AverageGiftAmount 0.905685

Transactions_Months1To12GiftsNumber Transactions_LifetimeGiftsNumber 0.899897

Transactions_Months13To24GiftsAmount Transactions_LifetimeGiftsAmount 0.890478

Transactions_Months1To12GiftsAmount Transactions_LifetimeGiftsAmount 0.868661

Transactions_LowestGiftAmount Transactions_FirstGiftAmount 0.855994

DonationFrequency Transactions_Months1To12GiftsNumber 0.834842

Transactions_Months13To24GiftsNumber Transactions_Months1To12GiftsNumber 0.823872

Transactions_HighestGiftAmount Transactions_AverageGiftAmount 0.816823

Transactions_Months25To36GiftsNumber Transactions_LifetimeGiftsNumber 0.811658

Transactions_LastGiftAmount Transactions_FirstGiftAmount 0.787498

Transactions_HighestGiftAmount Transactions_FirstGiftAmount 0.769152

dtype: float64NEW FEATURES AFTER REMOVAL

unscaled_features = ['Transactions_LifetimeGiftsAmount',

'Transactions_LifetimeGiftsNumber',

'Transactions_AverageGiftAmount',

'Transactions_Months25To36GiftsAmount',

'Transactions_FirstGiftAmount',

'monthsSinceFirstDonation',

'monthsSinceLastDonation',

'activeMonths',

]

scaled_features = ['DonationFrequency',

'DonationFrequencyActive',]

binary_features = []

features = unscaled_features + scaled_features + binary_featureslen(features)10TRANSFORMING, SCALING, PCA, OUTLIERS

Log transforming £ features

df_log = df[features].copy()

# Logarithmically scaling down features representing donation totals

# np.log1p is the function x |--> ln(1+x)

df_log['Transactions_Months1To12GiftsAmount'] = np.log1p(df['Transactions_Months1To12GiftsAmount'])

df_log['Transactions_Months13To24GiftsAmount'] = np.log1p(df['Transactions_Months13To24GiftsAmount'])

df_log['Transactions_Months25To36GiftsAmount'] = np.log1p(df['Transactions_Months25To36GiftsAmount'])

df_log['Transactions_FirstGiftAmount'] = np.log1p(df['Transactions_FirstGiftAmount'])

df_log['Transactions_LastGiftAmount'] = np.log1p(df['Transactions_LastGiftAmount'])

df_log['Transactions_HighestGiftAmount'] = np.log1p(df['Transactions_HighestGiftAmount'])

df_log['Transactions_LowestGiftAmount'] = np.log1p(df['Transactions_LowestGiftAmount'])

df_log['Transactions_LifetimeGiftsAmount'] = np.log1p(df['Transactions_LifetimeGiftsAmount'])

df_log['Transactions_AverageGiftAmount'] = np.log1p(df['Transactions_LifetimeGiftsAmount'])/df['Transactions_LifetimeGiftsNumber']df_log = df_log[features]df_log.sample(10)| Transactions_LifetimeGiftsAmount | Transactions_LifetimeGiftsNumber | Transactions_AverageGiftAmount | Transactions_Months25To36GiftsAmount | Transactions_FirstGiftAmount | monthsSinceFirstDonation | monthsSinceLastDonation | activeMonths | DonationFrequency | DonationFrequencyActive | |

|---|---|---|---|---|---|---|---|---|---|---|

| 391 | 4.418841 | 3 | 1.472947 | 3.419037 | 3.447763 | 27 | 3 | 25 | 0.107143 | 0.120000 |

| 305 | 5.931529 | 3 | 1.977176 | 4.624581 | 4.593401 | 29 | 17 | 13 | 0.100000 | 0.230769 |

| 485 | 4.115780 | 2 | 2.057890 | 0.000000 | 3.444257 | 15 | 15 | 1 | 0.125000 | 2.000000 |

| 533 | 4.565805 | 4 | 1.141451 | 3.477541 | 3.429137 | 27 | 4 | 24 | 0.142857 | 0.166667 |

| 467 | 6.584087 | 36 | 0.182891 | 5.380634 | 3.043093 | 36 | 1 | 36 | 0.972973 | 1.000000 |

| 143 | 4.389250 | 2 | 2.194625 | 0.000000 | 3.716738 | 14 | 14 | 1 | 0.133333 | 2.000000 |

| 147 | 3.741946 | 2 | 1.870973 | 0.000000 | 3.031099 | 6 | 6 | 1 | 0.285714 | 2.000000 |

| 667 | 4.145038 | 5 | 0.829008 | 3.088311 | 3.088311 | 27 | 3 | 25 | 0.178571 | 0.200000 |

| 623 | 5.312023 | 2 | 2.656012 | 4.632396 | 4.663722 | 27 | 27 | 1 | 0.071429 | 2.000000 |

| 97 | 5.546466 | 10 | 0.554647 | 4.467172 | 3.001714 | 31 | 1 | 31 | 0.312500 | 0.322581 |

StandardScaler

# Scaling non-binary features

scaler = StandardScaler()

df_scaled = pd.DataFrame(scaler.fit_transform(df[unscaled_features]), columns=unscaled_features)

df_concat = pd.concat([df_scaled, df[scaled_features], df[binary_features]], axis=1)PCA

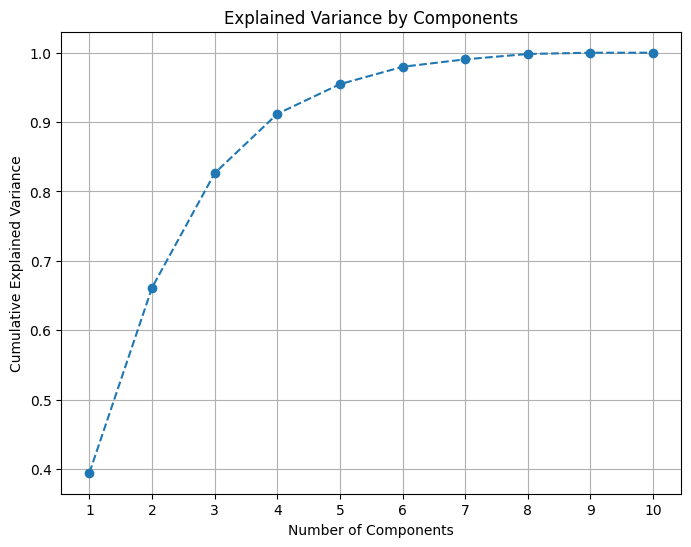

# Choosing n_components by producing an explained variance plot

pca = PCA()

df_pca = pca.fit_transform(df_concat)

# Calculate the cumulative explained variance

explained_variance = np.cumsum(pca.explained_variance_ratio_)

# Plot the elbow graph

plt.figure(figsize=(8, 6))

plt.plot(range(1, len(explained_variance) + 1), explained_variance, marker='o', linestyle='--')

plt.title('Explained Variance by Components')

plt.xlabel('Number of Components')

plt.ylabel('Cumulative Explained Variance')

# Add gridlines

plt.grid(True)

# Ensure non-fractional x labels

ax = plt.gca()

ax.xaxis.set_major_locator(ticker.MaxNLocator(integer=True))

plt.show()

# Apply PCA with chosen number of components

pca = PCA(n_components=7)

df_pca = pd.DataFrame(pca.fit_transform(df_concat))REMOVING OUTLIERS

We declare a donor to be an outlier if they have any of Transactions_LifetimeGiftsAmount, Transactions_AverageGiftAmount or Transactions_LifetimeGiftsNumber at more than 3 standard deviations above the mean.

Outliers are removed before clustering to improve interpretability of results.

# Define thresholds at which to cutoff outliers and remove such rows from the dataframe

amnt_thres = df['Transactions_LifetimeGiftsAmount'].mean() + 3*df['Transactions_LifetimeGiftsAmount'].std()

avg_thres = df['Transactions_AverageGiftAmount'].mean() + 3*df['Transactions_AverageGiftAmount'].std()

num_thres = df['Transactions_LifetimeGiftsNumber'].mean() + 3*df['Transactions_LifetimeGiftsNumber'].std()

mask = ~((df['Transactions_LifetimeGiftsAmount'] > amnt_thres) | (df['Transactions_AverageGiftAmount'] > avg_thres) | (df['Transactions_LifetimeGiftsNumber'] > num_thres))

df_pca_without_outliers = df_pca[mask]TUNING

k = 4

TUNING K MEANS

k = 4

# Define the parameter grid

param_grid = {

'init': ['k-means++', 'random'],

'n_init': list(range(10, 200)),

'algorithm': ['auto', 'lloyd', 'elkan'],

'max_iter': list(range(100, 500)),

'tol': [1e-4, 1e-3, 1e-2, 1e-1, 1],

}

# Create a KMeans instance with a fixed number of clusters

kmeans = KMeans(n_clusters=k)

# Create a silhouette scorer

silhouette_scorer = make_scorer(silhouette_score)

# Create a RandomizedSearchCV instance

random_search = RandomizedSearchCV(kmeans,

param_grid,

n_iter=50,

cv=3,

random_state=594,

scoring=silhouette_scorer,

)

# Fit the RandomizedSearchCV instance

random_search.fit(df_pca_without_outliers)

# Get the best parameters

best_params_kmeans_4 = random_search.best_params_

# Print the best parameters

print(f'Best params for KMeans with k={k}:\n')

print(best_params_kmeans_4)Best params for KMeans with k=4:

{'tol': 0.0001, 'n_init': 129, 'max_iter': 238, 'init': 'k-means++', 'algorithm': 'elkan'}TUNING GMM

k = 4

# Define the parameter grid for GMM

param_grid_gmm = {

'n_init': list(range(1, 21)),

'covariance_type': ['full', 'tied', 'diag', 'spherical'],

'tol': [1e-3, 1e-4, 1e-5, 1e-6],

'reg_covar': [1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1, 1],

'max_iter': list(range(100, 500))

}

# Create a GMM instance

gmm = GaussianMixture(n_components=k)

# Create a silhouette scorer

silhouette_scorer = make_scorer(silhouette_score)

# Create a RandomizedSearchCV instance for GMM

random_search_gmm = RandomizedSearchCV(gmm,

param_grid_gmm,

n_iter=50,

cv=3,

random_state=594,

scoring=silhouette_scorer,

)

# Fit the RandomizedSearchCV instance to your data

random_search_gmm.fit(df_pca_without_outliers)

# Get the best parameters for GMM

best_params_gmm_4 = random_search_gmm.best_params_

# Print the best parameters for GMM

print(f'Best params for GMM with k={k}:\n')

print(best_params_gmm_4)Best params for GMM with k=4:

{'tol': 1e-06, 'reg_covar': 1, 'n_init': 20, 'max_iter': 376, 'covariance_type': 'diag'}TUNING BIRCH

k = 4

# Define the parameter grid for BIRCH

param_grid_birch = {

'threshold': [0.1, 0.3, 0.5, 0.7, 0.9],

'branching_factor': list(range(20, 100))

}

# Create a BIRCH instance

birch = Birch(n_clusters=k)

# Create a silhouette scorer

silhouette_scorer = make_scorer(silhouette_score)

# Create a RandomizedSearchCV instance for BIRCH

random_search_birch = RandomizedSearchCV(birch,

param_grid_birch,

n_iter=50,

cv=3,

random_state=594,

scoring=silhouette_scorer,

)

# Fit the RandomizedSearchCV instance to your data

random_search_birch.fit(df_pca_without_outliers)

# Get the best parameters for BIRCH

best_params_birch_4 = random_search_birch.best_params_

# Print the best parameters for BIRCH

print(f'Best params for BIRCH with k={k}:\n')

print(best_params_birch_4)Best params for BIRCH with k=4:

{'threshold': 0.7, 'branching_factor': 51}k = 5

TUNING K MEANS

k = 5

param_grid = {

'init': ['k-means++', 'random'],

'n_init': list(range(10, 200)),

'algorithm': ['auto', 'lloyd', 'elkan'],

'max_iter': list(range(100, 500)),

'tol': [1e-4, 1e-3, 1e-2, 1e-1, 1],

}

# Create a KMeans instance with n clusters

kmeans = KMeans(n_clusters=k)

# Create a silhouette scorer

silhouette_scorer = make_scorer(silhouette_score)

# Create a RandomizedSearchCV instance

random_search = RandomizedSearchCV(kmeans,

param_grid,

n_iter=50,

cv=3,

random_state=594,

scoring=silhouette_scorer,

)

# Fit the RandomizedSearchCV instance

random_search.fit(df_pca_without_outliers)

# Get the best parameters

best_params_kmeans_5 = random_search.best_params_

# Print the best parameters

print(f'Best params for KMeans with k={k}:\n')

print(best_params_kmeans_5)Best params for KMeans with k=5:

{'tol': 0.0001, 'n_init': 129, 'max_iter': 238, 'init': 'k-means++', 'algorithm': 'elkan'}TUNING GMM

k = 5

# Define the parameter grid for GMM

param_grid_gmm = {

'n_init': list(range(1, 21)),

'covariance_type': ['full', 'tied', 'diag', 'spherical'],

'tol': [1e-3, 1e-4, 1e-5, 1e-6],

'reg_covar': [1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1, 1],

'max_iter': list(range(100, 500))

}

# Create a GMM instance

gmm = GaussianMixture(n_components=k)

# Create a silhouette scorer

silhouette_scorer = make_scorer(silhouette_score)

# Create a RandomizedSearchCV instance for GMM

random_search_gmm = RandomizedSearchCV(gmm,

param_grid_gmm,

n_iter=50,

cv=3,

random_state=594,

scoring=silhouette_scorer,

)

# Fit the RandomizedSearchCV instance to your data

random_search_gmm.fit(df_pca_without_outliers)

# Get the best parameters for GMM

best_params_gmm_5 = random_search_gmm.best_params_

# Print the best parameters for GMM

print(f'Best params for GMM with k={k}:\n')

print(best_params_gmm_5)Best params for GMM with k=5:

{'tol': 1e-06, 'reg_covar': 1, 'n_init': 20, 'max_iter': 376, 'covariance_type': 'diag'}TUNING BIRCH

k = 5

# Define the parameter grid for BIRCH

param_grid_birch = {

'threshold': np.arange(0.05, 1.05, 0.05),

'branching_factor': list(range(20, 200))

}

# Create a BIRCH instance

birch = Birch(n_clusters=k)

# Create a silhouette scorer

silhouette_scorer = make_scorer(silhouette_score)

# Create a RandomizedSearchCV instance for BIRCH

random_search_birch = RandomizedSearchCV(birch,

param_grid_birch,

n_iter=50,

cv=3,

random_state=594,

scoring=silhouette_scorer,

)

# Fit the RandomizedSearchCV instance to your data

random_search_birch.fit(df_pca_without_outliers)

# Get the best parameters for BIRCH

best_params_birch_5 = random_search_birch.best_params_

# Print the best parameters for BIRCH

print(f'Best params for KMeans with k={k}:\n')

print(best_params_birch_5)Best params for KMeans with k=5:

{'threshold': 0.7500000000000001, 'branching_factor': 80}CODE TO PRODUCE FOLIUM VISUALISATIONS

def make_map(df):

def make_map(df):

# Producing an interactive visualisation of results with folium

m = folium.Map(location=[52.9548, -1.1581], zoom_start=12)

colors = ['green', 'blue', 'orange', 'red', 'black']

for index, row in df.iterrows():

fname = 'Example'

lname = 'Donor'

email = 'exampledonor@email.com'

total_don = row['Transactions_LifetimeGiftsAmount']

num_don = row['Transactions_LifetimeGiftsNumber']

avg_don = row['Transactions_AverageGiftAmount']

news = bool(row['Newsletter'])

monthly = bool(row['monthlyDonorMonths1to12'])

lat = row['Latitude']

long = row['Longitude']

dateoffirst = row['Transactions_DateOfFirstGift'].strftime('%d/%m/%Y')

dateoflast = row['Transactions_DateOfLastGift'].strftime('%d/%m/%Y')

active = row['activeMonths']

freq = row['DonationFrequency']

freq_active = row['DonationFrequencyActive']

label = row['ClusterLabel']

dist = row['DistanceFromEHSC']

# Add popups when a marker clicked on

popup_text = f'''

<div style="width: 200px; font-family: Arial; line-height: 1.2;">

<h4 style="margin-bottom: 5px;">{fname} {lname}</h4>

<p style="margin: 0;"><b>Total Donated:</b> £{total_don:.2f}</p>

<p style="margin: 0;"><b>Number of Donations:</b> {num_don}</p>

<p style="margin: 0;"><b>Average Donation:</b> £{avg_don:.2f}</p>

<br>\

<p style="margin: 0;"><b>First Recorded Donation:</b> {dateoffirst}</p>

<p style="margin: 0;"><b>Last Recorded Donation:</b> {dateoflast}</p>

<br>\

<p style="margin: 0;"><b>ActiveMonths:</b> {active}</p>

<p style="margin: 0;"><b>DonationFrequency</b> {freq:.2f}</p>

<p style="margin: 0;"><b>DonationFrequencyActive</b> {freq_active:.2f}</p>

<br>\

<p style="margin: 0;"><b>Subscribed to Newsletter:</b> {"Yes" if news else "No"}</p>

<p style="margin: 0;"><b>Current Monthly Donor:</b> {"Yes" if monthly else "No"}</p>

<br>\

<p style="margin: 0;"><b>Distance from EHSC:</b> {dist:.2f}km</p>

<br>\

<p style="margin: 0;"><b>Email:</b><br> {email}</p>

<br>\

<p style="margin: 0;"><b>ClusterLabel:</b><br> {label}</p>

</div>

'''

color = colors[label]

marker = folium.CircleMarker(

location=[lat, long],

radius=5,

color=color,

fill=True,

fill_color=color,

fill_opacity=0.7,

popup=popup_text

).add_to(m)

# Create a marker at EHSC

ehsc_html = '''<h4 style="margin-bottom: 5px;">Emmanuel House Support Centre</h4>

<a href="https://www.emmanuelhouse.org.uk/" target="_blank">https://www.emmanuelhouse.org.uk/</a>

<p>Emmanuel House is an independent charity that supports people who are homeless, rough sleeping, in crisis, or at risk of homelessness in Nottingham.</p>

'''

ehsc_coords = (52.95383, -1.14168)

marker = folium.Marker(location=ehsc_coords, popup=folium.Popup(ehsc_html))

m.add_child(marker)

return m

TUNED ENSEMBLES

k = 4

ENSEMBLE: k=4, UNTUNED

k = 4

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594),

GaussianMixture(n_components=k,

random_state=594),

Birch(n_clusters=k),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.32390613387277556# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

3 230

0 227

1 202

2 59

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)ENSEMBLE: k=4, KMEANS TUNED

k = 4

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594,

**best_params_kmeans_4),

GaussianMixture(n_components=k,

random_state=594),

Birch(n_clusters=k),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.2949886894806673# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

2 231

3 196

1 184

0 107

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)ENSEMBLE: k=4, KMEANS AND GMM TUNED

k = 4

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594,

**best_params_kmeans_4),

GaussianMixture(n_components=k,

random_state=594,

**best_params_gmm_4),

Birch(n_clusters=k),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.15953504480250802# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

2 327

0 219

1 155

3 17

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)ENSEMBLE: k=4, ALL TUNED

k = 4

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594,

**best_params_kmeans_4),

GaussianMixture(n_components=k,

random_state=594,

**best_params_gmm_4),

Birch(n_clusters=k,

**best_params_birch_4),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.21204320844898658# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

2 327

1 188

0 185

3 18

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)k = 5

ENSEMBLE: k=5, UNTUNED

k = 5

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594),

GaussianMixture(n_components=k,

random_state=594),

Birch(n_clusters=k),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.3549508024992924# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

0 224

3 188

1 165

4 76

2 65

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)ENSEMBLE: k=5, KMEANS TUNED

k = 4

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594,

**best_params_kmeans_5),

GaussianMixture(n_components=k,

random_state=594),

Birch(n_clusters=k),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.2949886894806673# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

2 231

3 196

1 184

0 107

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)ENSEMBLE: k=5, KMEANS & GMM TUNED

k = 5

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594,

**best_params_kmeans_5),

GaussianMixture(n_components=k,

random_state=594,

**best_params_gmm_5),

Birch(n_clusters=k),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.39211366933147423# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

2 269

1 175

4 148

0 81

3 45

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)ENSEMBLE: k=5, ALL TUNED

k = 5

# Drop 'ClusterLabel' if present from a previous run

# (Otherwise the 'ClusterLabel' feature would be used in the clustering)

df = drop_column(df, 'ClusterLabel')

df_pca_without_outliers = drop_column(df_pca_without_outliers, 'ClusterLabel')

# Create the clustering models

models = [

KMeans(n_clusters=k,

random_state=594,

**best_params_kmeans_5),

GaussianMixture(n_components=k,

random_state=594,

**best_params_gmm_5),

Birch(n_clusters=k,

**best_params_birch_5),

]

# Fit the models and predict the labels

labels = []

for model in models:

model.fit(df_pca_without_outliers)

if isinstance(model, GaussianMixture):

label = model.predict(df_pca_without_outliers) # For GMM, use predict to get hard labels

else:

label = model.labels_

labels.append(label)

# Combine the labels using a majority voting scheme

labels_ensemble = []

for i in range(len(df_pca_without_outliers)):

# Get the labels for the i-th data point from all models

labels_i = [labels_j[i] for labels_j in labels]

# Get the most common label

most_common_label = Counter(labels_i).most_common(1)[0][0]

labels_ensemble.append(most_common_label)

# Get and print avg silhouette score

silhouette_avg = silhouette_score(df_pca_without_outliers, labels_ensemble)

print("Average silhouette score of clustering:", silhouette_avg)Average silhouette score of clustering: 0.37188413554296684# Assign the ensemble labels to the dataframe

df_pca_without_outliers['ClusterLabel'] = labels_ensemble

df_pca_without_outliers['ClusterLabel'].value_counts()ClusterLabel

2 281

1 177

4 148

0 81

3 31

Name: count, dtype: int64# Producing interactive visualisation of clustering results with folium

geo = df.merge(df_pca_without_outliers[['ClusterLabel']], left_index=True, right_index=True, how='left')

geo = geo.dropna()

geo['ClusterLabel'] = geo['ClusterLabel'].astype(int)

make_map(geo)